OpenBot-Fleet: A System for Collective Learning with Real Robots

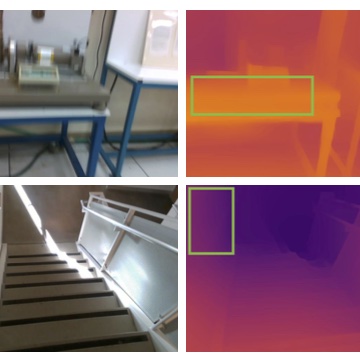

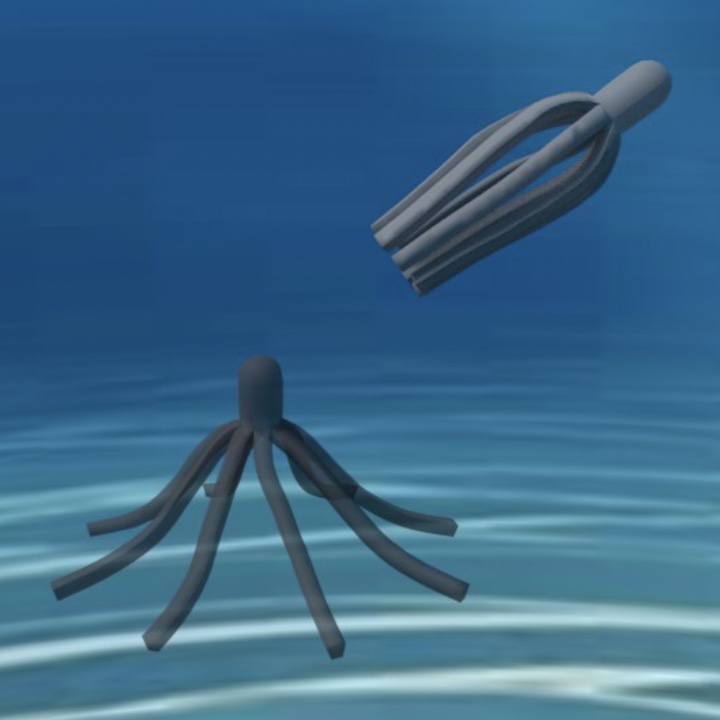

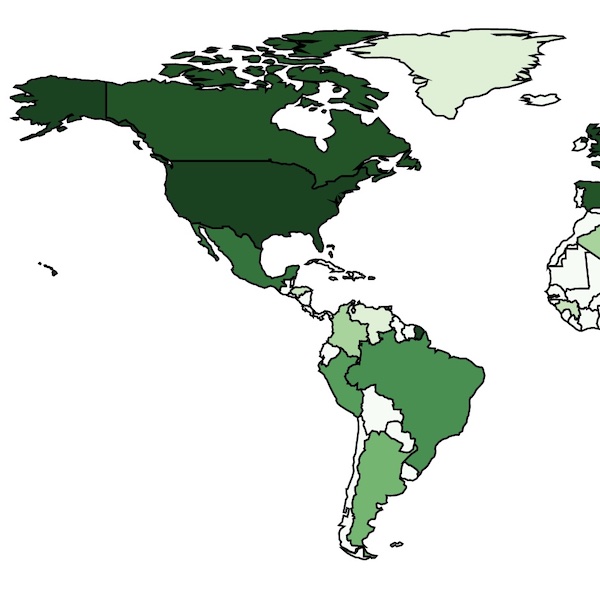

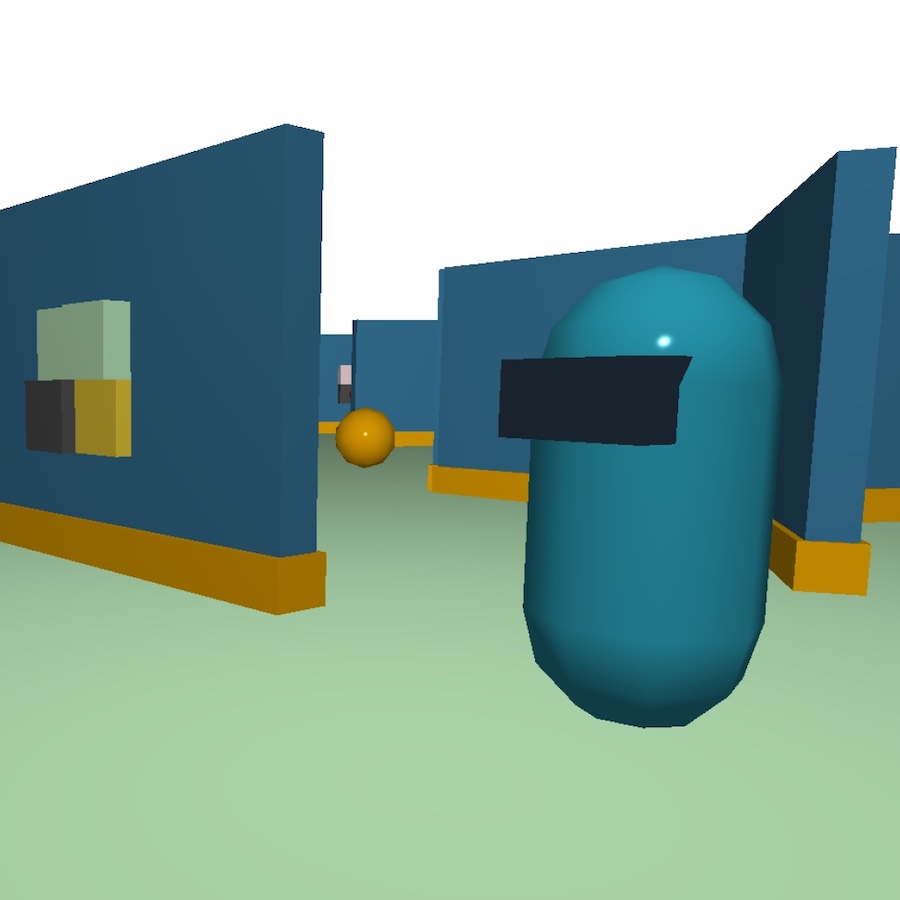

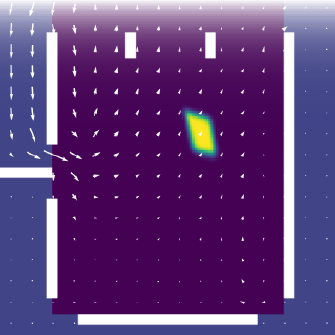

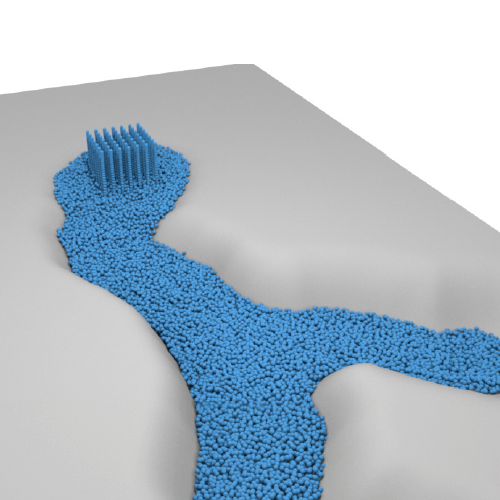

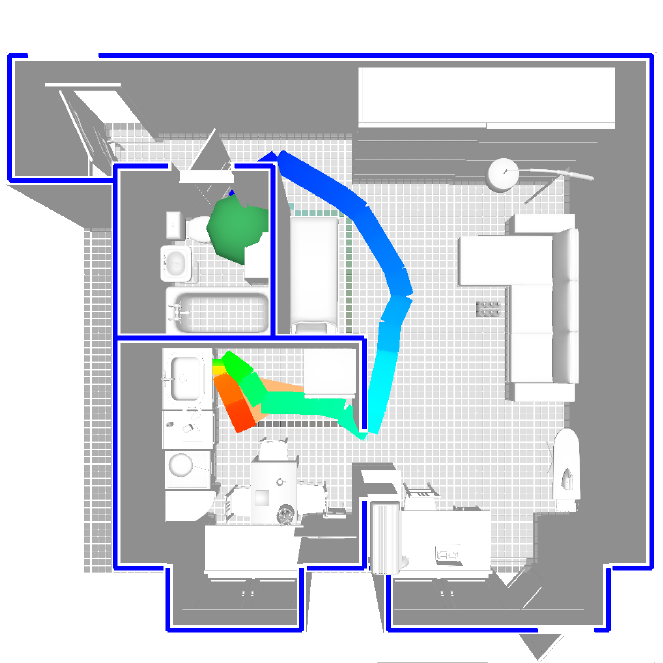

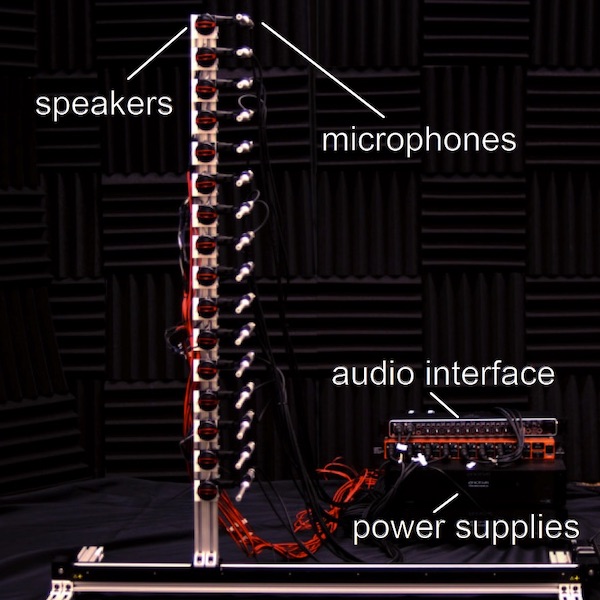

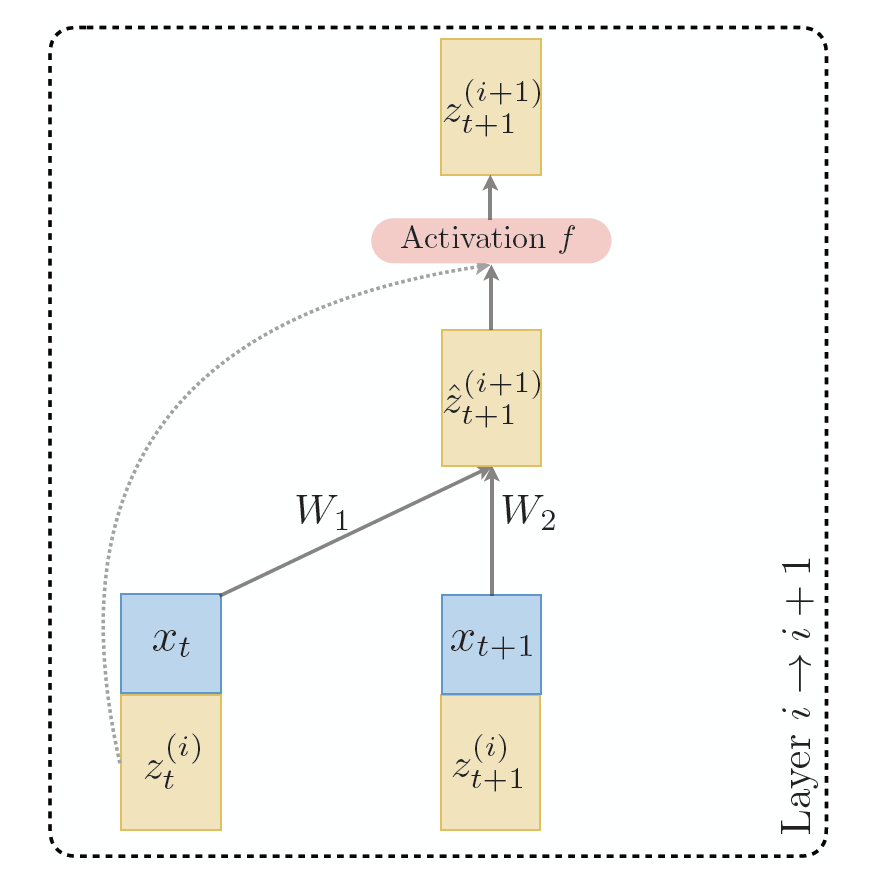

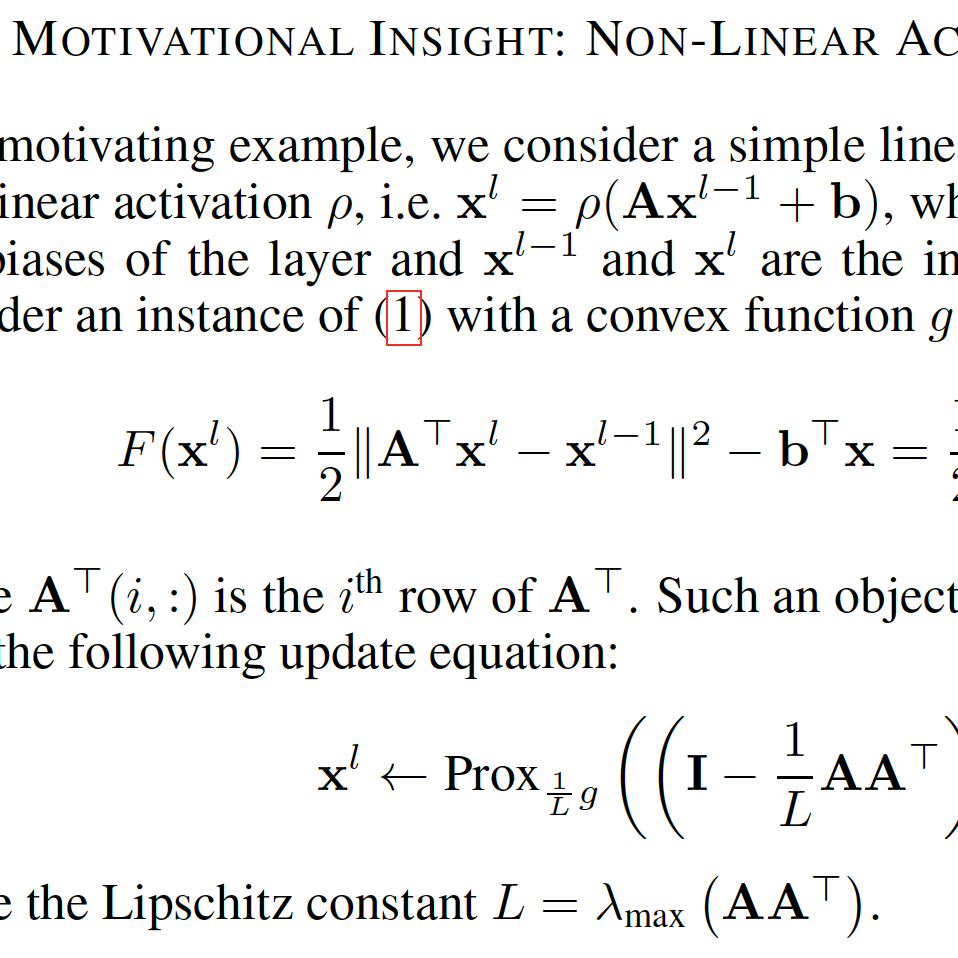

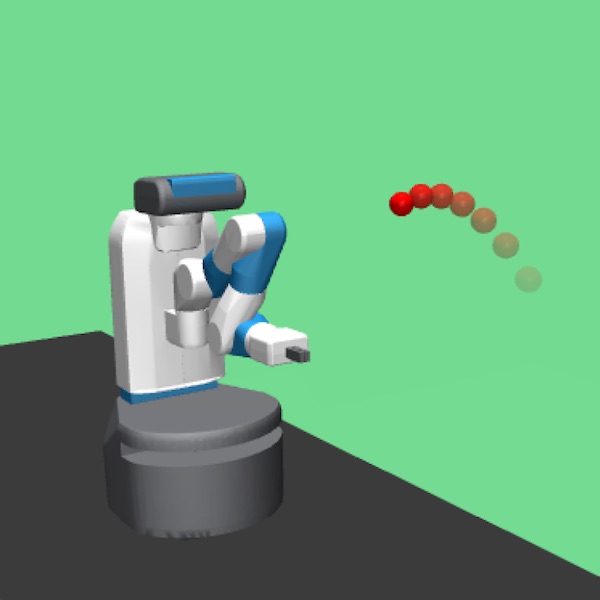

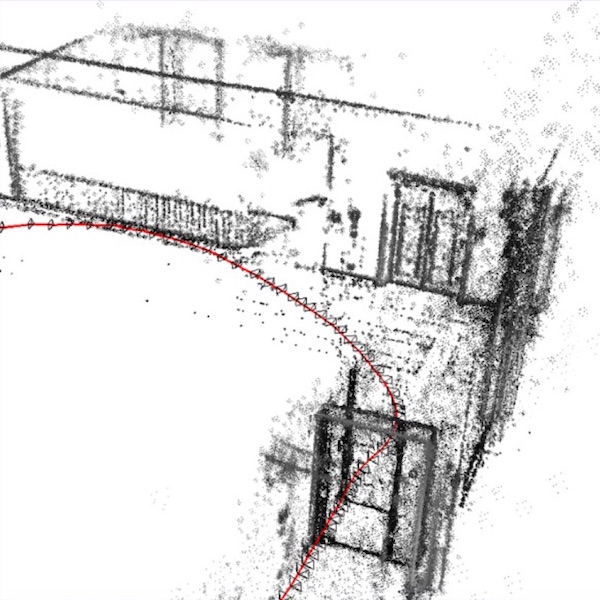

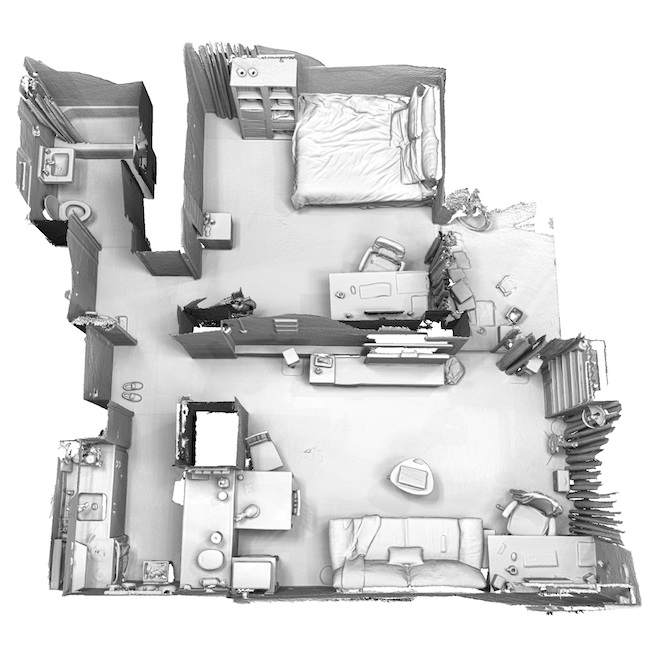

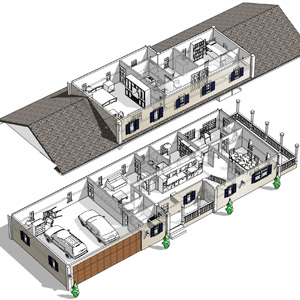

We introduce OpenBot-Fleet, a comprehensive open-source cloud robotics system for navigation. OpenBot-Fleet uses smartphones for sensing, local compute, and communication; Google Firebase for secure cloud storage and off-board compute; and a robust yet low-cost wheeled robot to act in real-world environments. The robots collect task data and upload it to the cloud, where navigation policies can be learned either offline or online and then sent back to the robot fleet. In our experiments, we distribute 72 robots to a crowd of workers who operate them in homes, and show that OpenBot-Fleet can learn robust navigation policies that generalize to unseen homes with a success rate above 80%. OpenBot-Fleet represents a significant step forward in cloud robotics, making it possible to deploy large, continually learning robot fleets in a cost-effective and scalable manner. All materials can be found at https://www.openbot.org/.
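
The collect, upload, learn, and redistribute cycle described above can be illustrated with a minimal Python sketch. It is not the OpenBot-Fleet implementation: `CloudStore`, `Robot`, and `run_round` are hypothetical names, the cloud is an in-memory stand-in rather than Google Firebase, observations and actions are single floats rather than camera frames and motor commands, and the "policy" is a one-parameter least-squares fit chosen only to keep the example runnable.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# One episode is a list of (observation, action) pairs.
# Assumption: scalars stand in for camera frames and control signals.
Episode = List[Tuple[float, float]]


@dataclass
class CloudStore:
    """In-memory stand-in for cloud storage and off-board compute (hypothetical)."""
    episodes: List[Episode] = field(default_factory=list)
    policy_version: int = 0
    policy_gain: float = 0.0

    def upload(self, episode: Episode) -> None:
        self.episodes.append(episode)

    def train_offline(self) -> None:
        # Least-squares fit of action = gain * observation over all uploaded data.
        pairs = [pair for episode in self.episodes for pair in episode]
        numerator = sum(obs * act for obs, act in pairs)
        denominator = sum(obs * obs for obs, _ in pairs)
        if denominator > 0:
            self.policy_gain = numerator / denominator
            self.policy_version += 1


@dataclass
class Robot:
    """Hypothetical robot that records demonstrations and runs the latest policy."""
    robot_id: str
    policy_version: int = 0
    policy_gain: float = 0.0

    def collect_episode(self) -> Episode:
        # Assumed operator demonstration: the action is twice the observation.
        return [(x, 2.0 * x) for x in (0.5, 1.0, 1.5)]

    def sync_policy(self, cloud: CloudStore) -> None:
        self.policy_version = cloud.policy_version
        self.policy_gain = cloud.policy_gain

    def act(self, observation: float) -> float:
        return self.policy_gain * observation


def run_round(fleet: List[Robot], cloud: CloudStore) -> None:
    """One cycle: every robot uploads data, the cloud trains, every robot syncs."""
    for robot in fleet:
        cloud.upload(robot.collect_episode())
    cloud.train_offline()
    for robot in fleet:
        robot.sync_policy(cloud)


if __name__ == "__main__":
    cloud = CloudStore()
    fleet = [Robot(robot_id=f"robot-{i}") for i in range(3)]
    run_round(fleet, cloud)
    print(cloud.policy_version, fleet[0].act(1.0))
```

Calling `run_round` repeatedly mimics offline retraining on a growing dataset; an online variant would update the policy as each episode arrives instead of after the whole fleet has uploaded.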