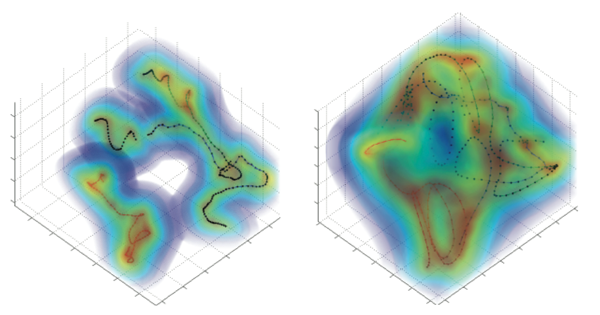

Interactive, task-guided character controllers must be agile and responsive to user input, while retaining the flexibility to be readily authored and modified by the designer. Central to a method’s ease of use is its capacity to synthesize character motion for novel situations without requiring excessive data or programming effort. In this work, we present a technique that animates characters performing user-specified tasks using a probabilistic motion model trained on a small number of artist-provided animation clips. The method uses a low-dimensional space learned from the example motions to continuously control the character’s pose to accomplish the desired task. By controlling the character through this reduced space, our method can discover new transitions, tractably precompute a control policy, and avoid low-quality poses.