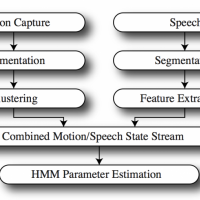

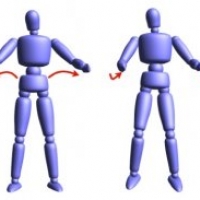

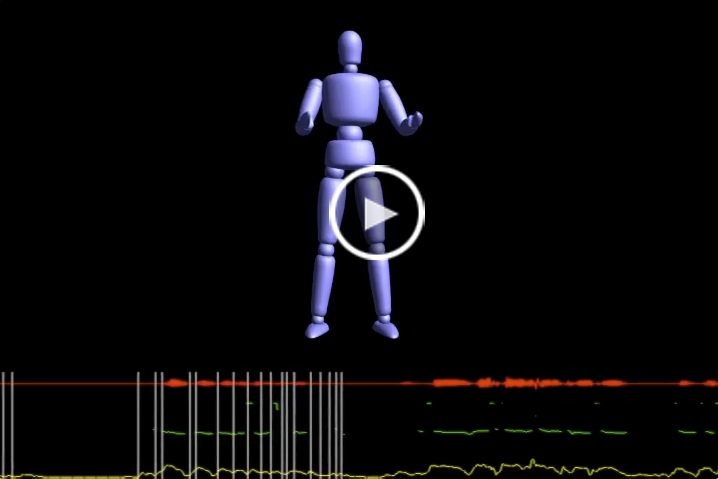

Human communication involves not only speech, but also a wide variety of gestures and body motions. Interactions in virtual environments often lack this multi-modal aspect of communication. We present a method for automatically synthesizing body language animations directly from the participants’ speech signals, without the need for additional input. Our system generates appropriate body language animations by selecting segments from motion capture data of real people in conversation. The synthesis can be performed progressively, with no advance knowledge of the utterance, making the system suitable for animating characters from live human speech. The selection is driven by a hidden Markov model and uses prosody-based features extracted from speech. The training phase is fully automatic and does not require hand-labeling of input data, and the synthesis phase is efficient enough to run in real time on live microphone input. User studies confirm that our method produces realistic and compelling body language.
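
To make the idea of progressive, HMM-driven selection concrete, the following is a minimal sketch and not the model described in this paper. It assumes a toy hidden Markov model whose hidden states stand for clusters of motion segments and whose observations are quantized prosody symbols. The state names, observation symbols, probability tables, and the functions `forward_step` and `select_motions` are all hypothetical, chosen only for illustration. At each step the sketch updates a belief over states with standard forward filtering, using only the speech seen so far, and emits the most probable state as the motion to play.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical hidden states: each stands for a cluster of motion-capture segments.
STATES = ["rest", "beat", "sweep"]

# Hypothetical transition probabilities P(next state | current state).
TRANSITION: Dict[str, Dict[str, float]] = {
    "rest": {"rest": 0.7, "beat": 0.2, "sweep": 0.1},
    "beat": {"rest": 0.2, "beat": 0.6, "sweep": 0.2},
    "sweep": {"rest": 0.3, "beat": 0.2, "sweep": 0.5},
}

# Hypothetical emission probabilities P(prosody symbol | state).
# The symbols stand in for quantized prosody features such as pitch or intensity.
EMISSION: Dict[str, Dict[str, float]] = {
    "rest": {"quiet": 0.8, "steady": 0.15, "emphatic": 0.05},
    "beat": {"quiet": 0.1, "steady": 0.6, "emphatic": 0.3},
    "sweep": {"quiet": 0.05, "steady": 0.25, "emphatic": 0.7},
}

# Hypothetical initial state distribution.
INITIAL: Dict[str, float] = {"rest": 0.6, "beat": 0.2, "sweep": 0.2}


def normalize(weights: Dict[str, float]) -> Dict[str, float]:
    """Scale non-negative weights so that they sum to one."""
    total = sum(weights.values())
    return {state: value / total for state, value in weights.items()}


def forward_step(belief: Dict[str, float], observation: str) -> Dict[str, float]:
    """One forward-filtering step: predict with the transition model,
    weight by the emission likelihood, then normalize."""
    weighted = {}
    for state in STATES:
        predicted = sum(belief[prev] * TRANSITION[prev][state] for prev in STATES)
        weighted[state] = predicted * EMISSION[state][observation]
    return normalize(weighted)


def select_motions(prosody_stream: Iterable[str]) -> Iterator[str]:
    """Yield one motion state per incoming prosody symbol.

    Only past and current observations are used, so the generator can be
    driven by a live stream without knowing the rest of the utterance.
    """
    belief = None
    for observation in prosody_stream:
        if belief is None:
            # First observation: combine the initial distribution with the emission.
            belief = normalize(
                {s: INITIAL[s] * EMISSION[s][observation] for s in STATES}
            )
        else:
            belief = forward_step(belief, observation)
        yield max(STATES, key=lambda s: belief[s])


if __name__ == "__main__":
    for motion in select_motions(["quiet", "quiet", "emphatic", "emphatic"]):
        print(motion)
```

Because `select_motions` is a generator, it can consume symbols one at a time as they arrive. A real system would additionally need feature extraction from audio, a model trained from data, and smooth blending between the selected motion segments, none of which this sketch attempts.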