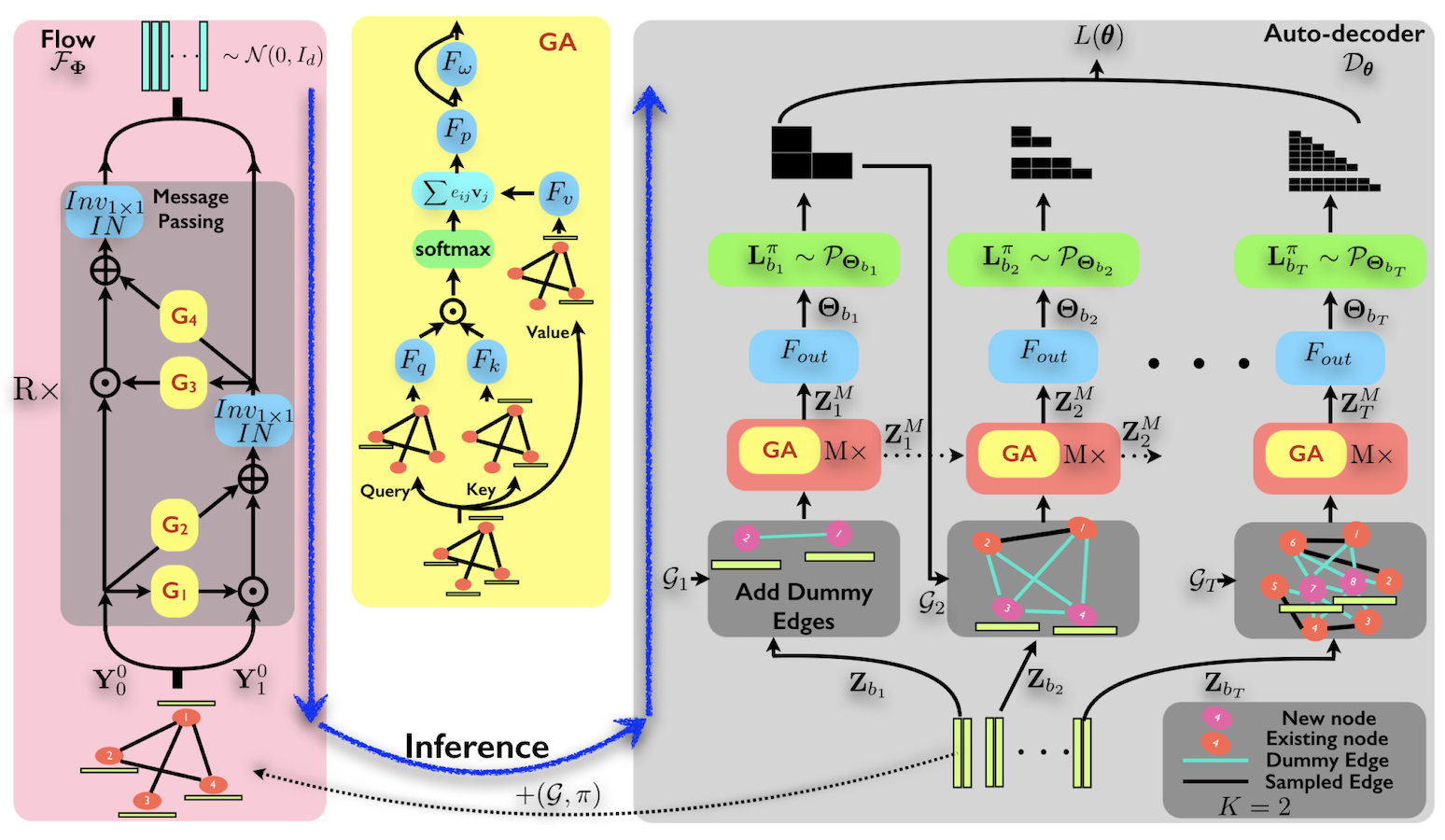

We present an approach to synthesizing new graph structures from empirically specified distributions. The generative model is an auto-decoder that learns to synthesize graphs from latent codes. The graph synthesis model is learned jointly with an empirical distribution over the latent codes. Graphs are synthesized using self-attention modules that are trained to identify likely connectivity patterns. Graph-based normalizing flows are used to sample latent codes from the distribution learned by the auto-decoder. The resulting model combines accuracy and scalability. On benchmark datasets of large graphs, the presented model outperforms the state of the art by a factor of 1.5 in mean accuracy and average rank across at least three different graph statistics, with a 2x speedup during inference.
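To make the auto-decoder idea concrete, the following is a minimal sketch, not the model described above: it learns one latent code per training graph jointly with a shared decoder, with no encoder involved. As a loudly stated assumption, the decoder here is a plain linear map from the code to edge logits, standing in for the self-attention modules; the normalizing flow over latent codes is not shown. The function name `train_auto_decoder`, the parameters `latent_dim`, `steps`, and `lr`, and the toy graphs are all hypothetical and chosen for illustration only.

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train_auto_decoder(graphs, latent_dim=2, steps=500, lr=0.2, seed=0):
    """Jointly fit per-graph latent codes and a shared linear decoder.

    Each graph is a flat 0/1 list over all candidate edges.
    Assumption: a linear decoder replaces the paper's self-attention decoder.
    """
    rng = random.Random(seed)
    num_edges = len(graphs[0])
    # One free latent code per training graph (the "auto-decoder" part).
    codes = [[rng.gauss(0, 0.1) for _ in range(latent_dim)] for _ in graphs]
    # Shared decoder parameters: edge logits = weights @ code + bias.
    weights = [[rng.gauss(0, 0.1) for _ in range(latent_dim)]
               for _ in range(num_edges)]
    bias = [0.0] * num_edges
    losses = []
    for _ in range(steps):
        total = 0.0
        for z, target in zip(codes, graphs):
            grad_z = [0.0] * latent_dim
            for k in range(num_edges):
                logit = bias[k] + sum(w * zj for w, zj in zip(weights[k], z))
                p = sigmoid(logit)
                # Binary cross-entropy on the presence of edge k.
                total -= (target[k] * math.log(p + 1e-12)
                          + (1 - target[k]) * math.log(1 - p + 1e-12))
                g = p - target[k]  # d(loss)/d(logit)
                for j in range(latent_dim):
                    grad_z[j] += g * weights[k][j]
                    weights[k][j] -= lr * g * z[j]
                bias[k] -= lr * g
            # The code is updated by gradient descent, like any other parameter.
            for j in range(latent_dim):
                z[j] -= lr * grad_z[j]
        losses.append(total)
    return codes, weights, bias, losses


def decode(code, weights, bias):
    # Map a latent code to per-edge probabilities.
    return [sigmoid(b + sum(w * zj for w, zj in zip(row, code)))
            for row, b in zip(weights, bias)]


# Toy data: 4-node graphs over edges (0,1),(0,2),(0,3),(1,2),(1,3),(2,3).
toy_graphs = [
    [1, 0, 0, 1, 0, 1],  # path 0-1-2-3
    [1, 1, 1, 0, 0, 0],  # star centered at node 0
    [1, 0, 1, 1, 0, 1],  # cycle 0-1-2-3-0
]
codes, weights, bias, losses = train_auto_decoder(toy_graphs)
edge_probs = decode(codes[0], weights, bias)
```

In this sketch, a new graph would be synthesized by passing a fresh latent code to `decode`; how that code is sampled is exactly the role the abstract assigns to the learned distribution over latent codes.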

Auto-decoding Graphs