Publications

Santhosh Kumar Ramakrishnan, Erik Wijmans, Philipp Krähenbühl, Vladlen Koltun

International Conference on Learning Representations (ICLR), 2025

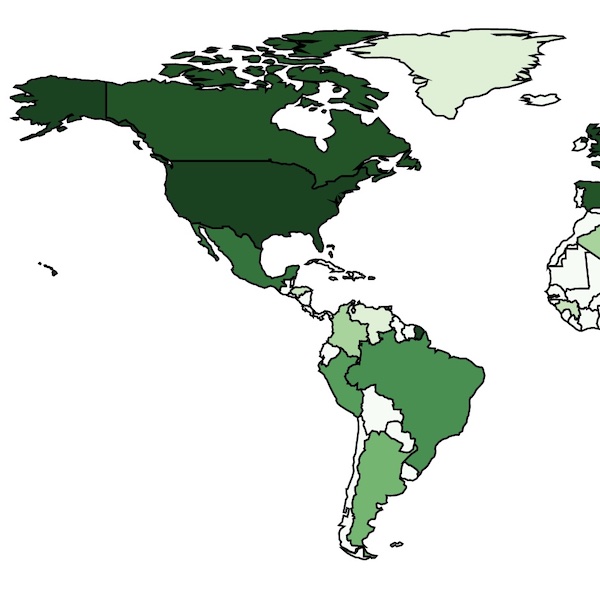

Erik Wijmans, Brody Huval, Alexander Hertzberg, Vladlen Koltun, Philipp Krähenbühl

International Conference on Learning Representations (ICLR), 2025

(Selected for oral presentation)

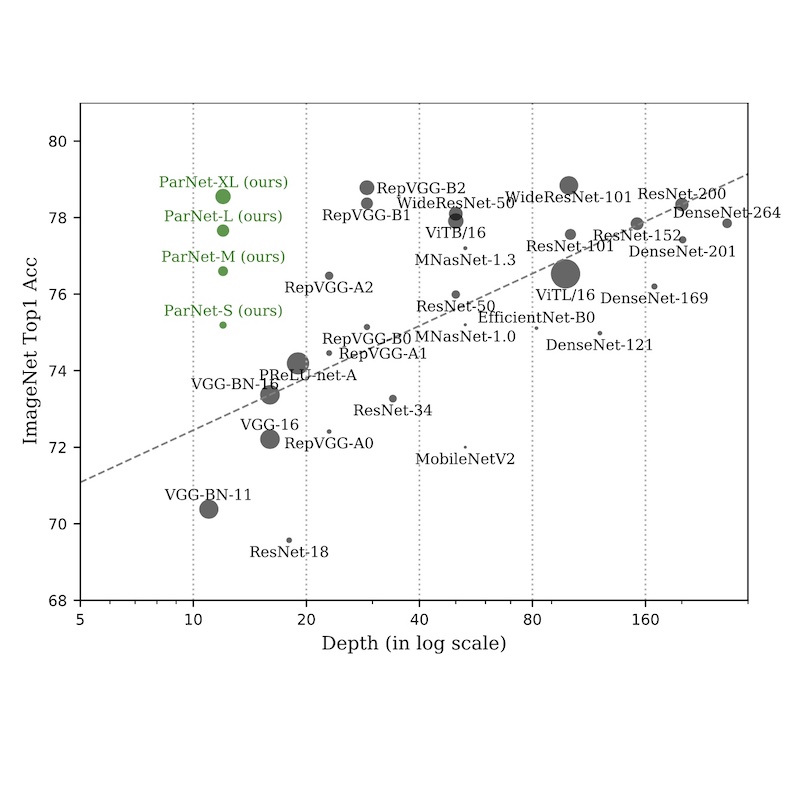

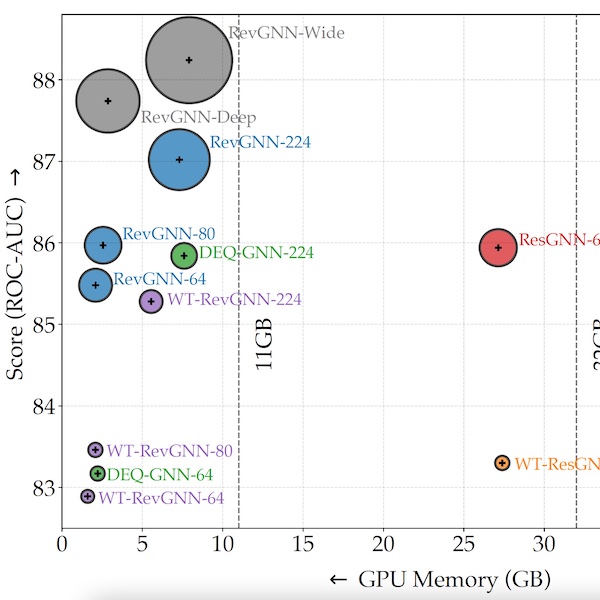

Ankit Goyal, Alexey Bochkovskiy, Jia Deng, and Vladlen Koltun

Advances in Neural Information Processing Systems (NeurIPS), 2022

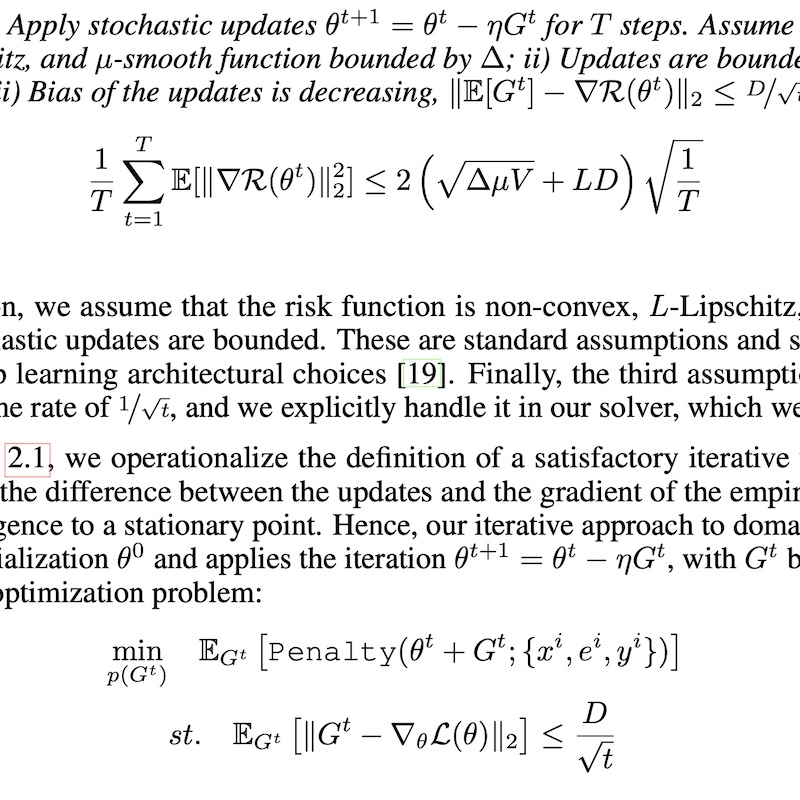

Ozan Sener and Vladlen Koltun

Advances in Neural Information Processing Systems (NeurIPS), 2022

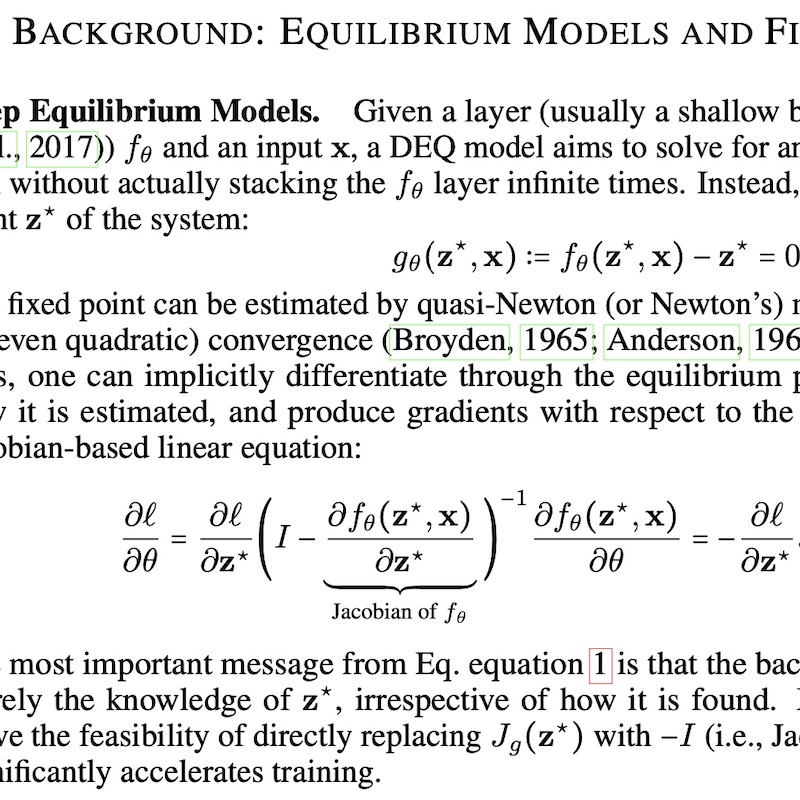

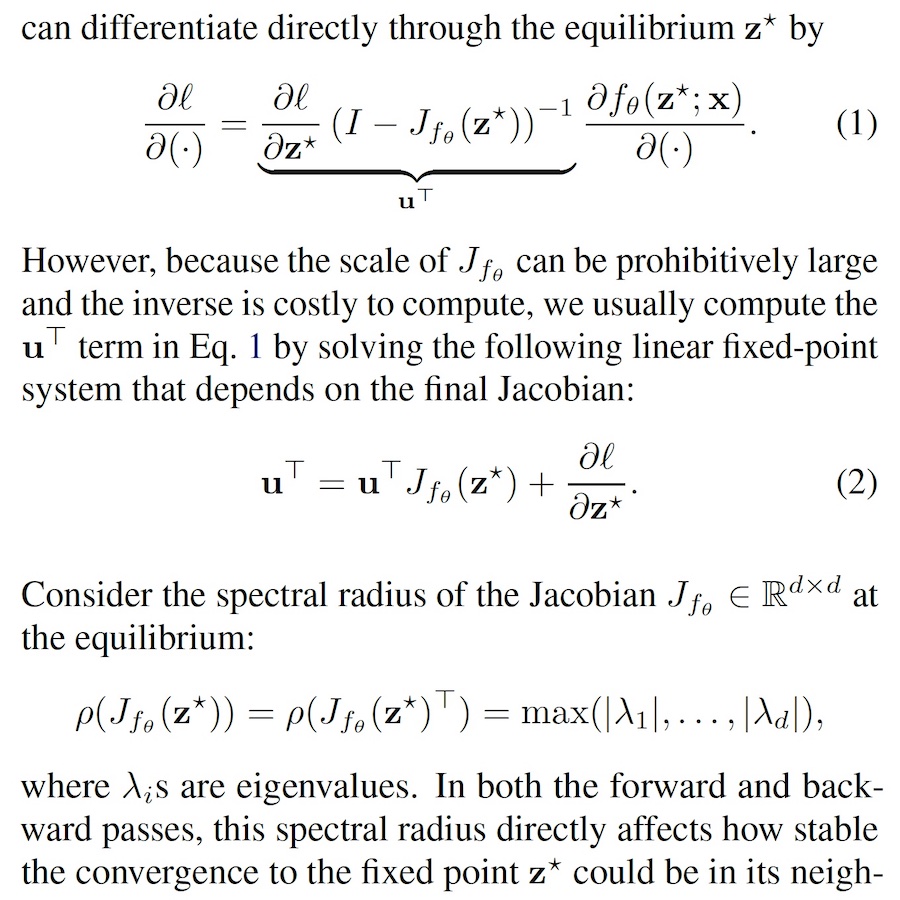

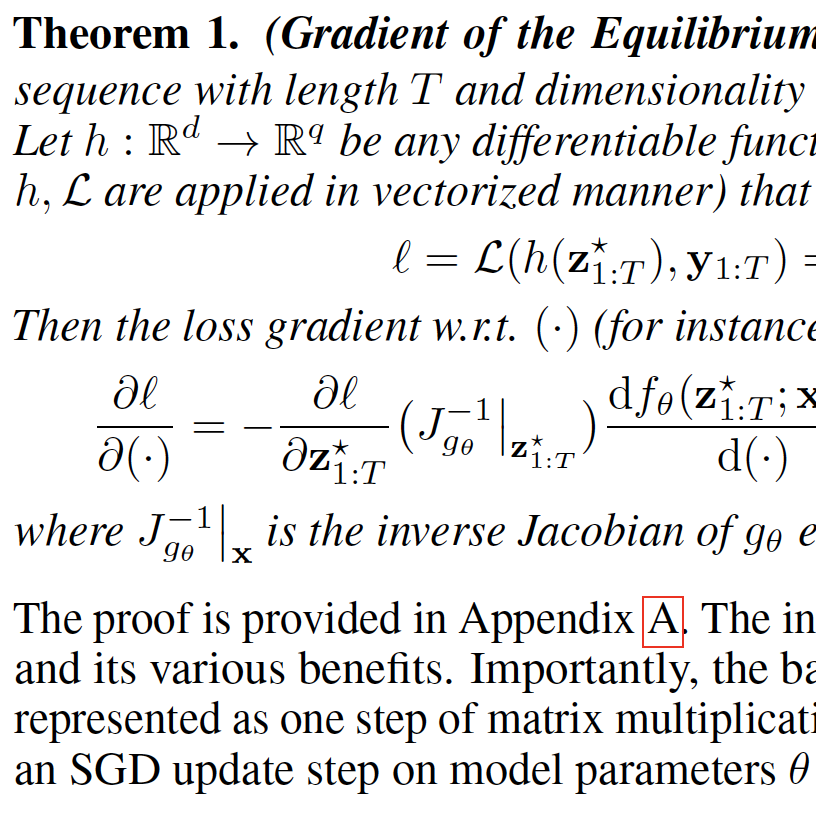

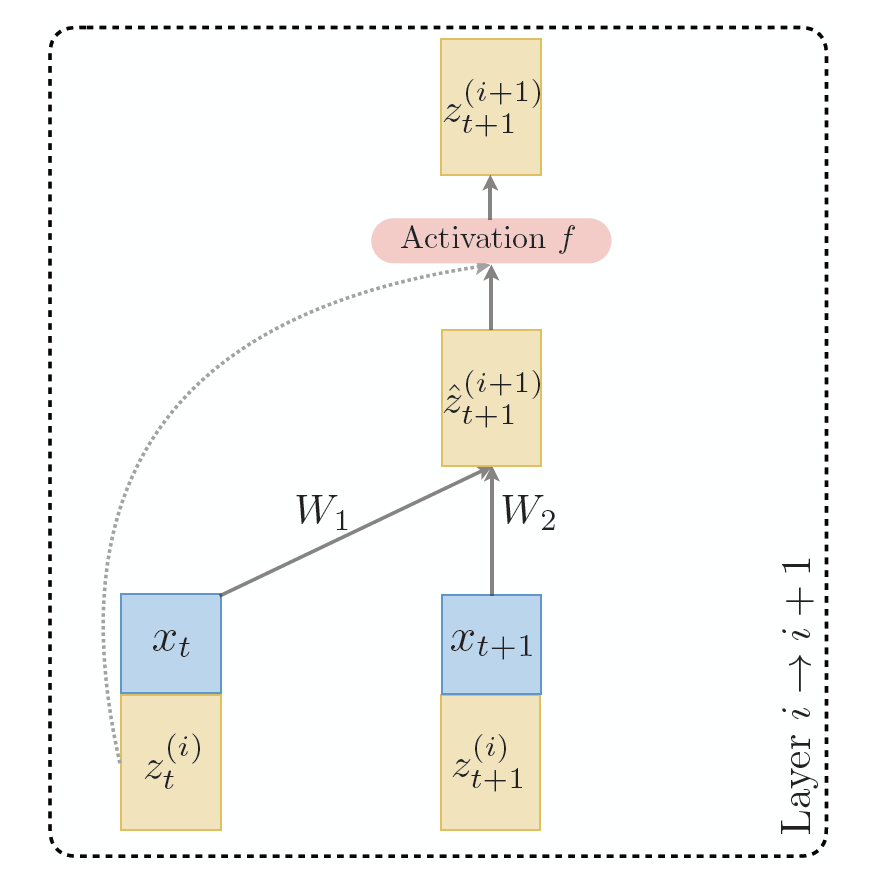

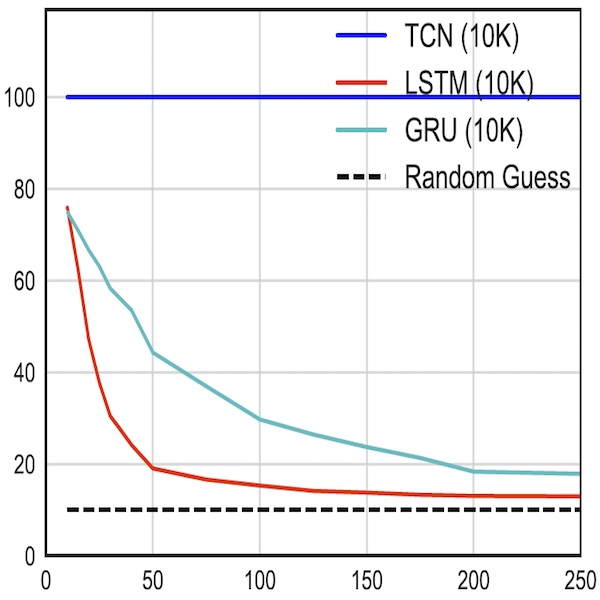

Shaojie Bai, Vladlen Koltun, and J. Zico Kolter

International Conference on Learning Representations (ICLR), 2022

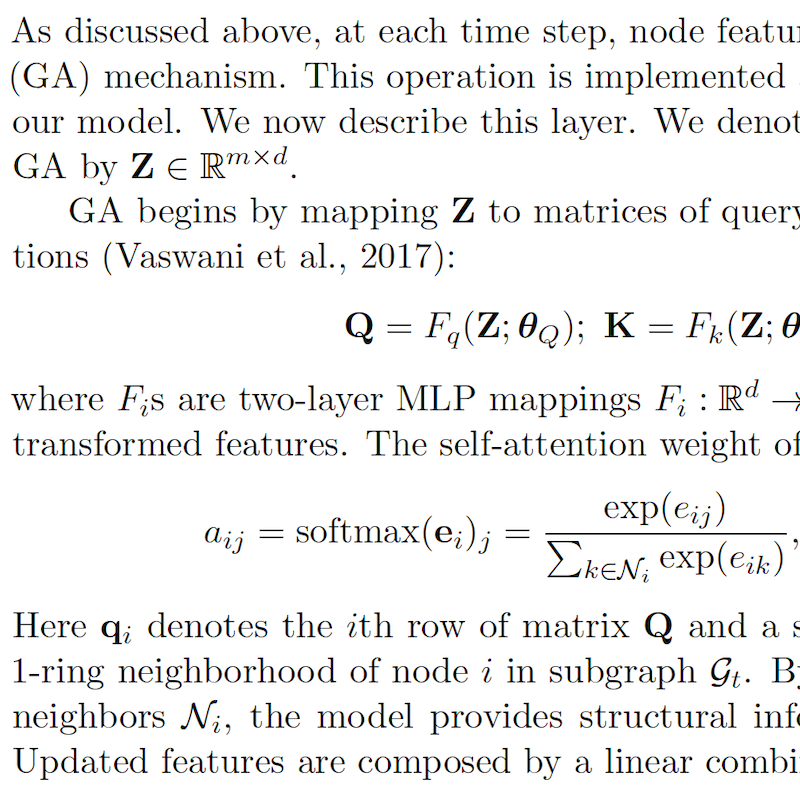

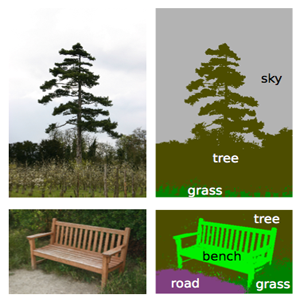

Zhipeng Cai, Ozan Sener, and Vladlen Koltun

International Conference on Computer Vision (ICCV), 2021

Shaojie Bai, Vladlen Koltun, and J. Zico Kolter

International Conference on Machine Learning (ICML), 2021

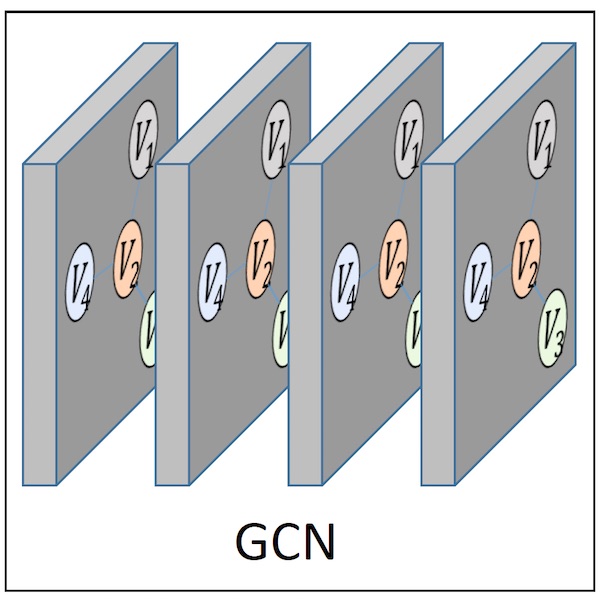

Guohao Li, Matthias Müller, Bernard Ghanem, and Vladlen Koltun

International Conference on Machine Learning (ICML), 2021

Hexiang Hu, Ozan Sener, Fei Sha, and Vladlen Koltun

IEEE Transactions on Pattern Analysis and Machine Intelligence, 45(5), 2023

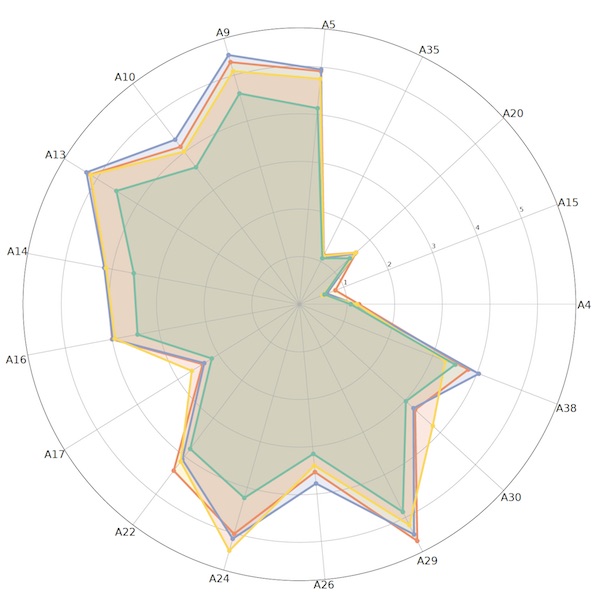

Sohil Atul Shah and Vladlen Koltun

Pre-print, arXiv:2006.02879, 2020

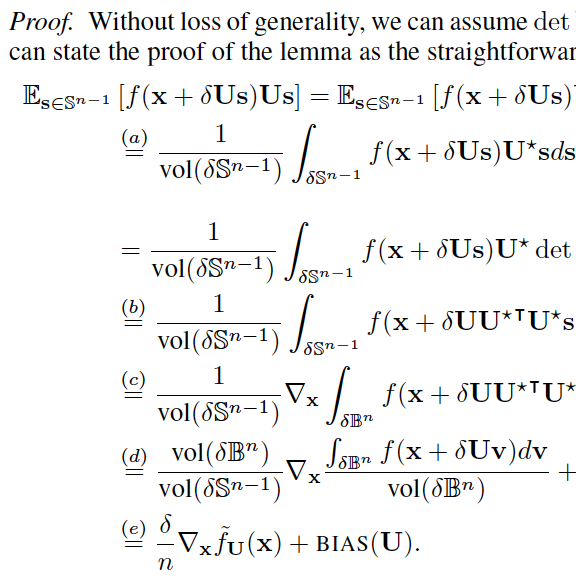

Shaojie Bai, Vladlen Koltun, and J. Zico Kolter

Advances in Neural Information Processing Systems (NeurIPS), 2020

(Selected for full oral presentation)

Ozan Sener and Vladlen Koltun

International Conference on Learning Representations (ICLR), 2020

Shaojie Bai, J. Zico Kolter, and Vladlen Koltun

Advances in Neural Information Processing Systems (NeurIPS), 2019

(Selected for spotlight oral presentation)

Brandon Amos, Vladlen Koltun, and J. Zico Kolter

Technical Report, arXiv:1906.08707, 2019

Shaojie Bai, J. Zico Kolter, and Vladlen Koltun

International Conference on Learning Representations (ICLR), 2019

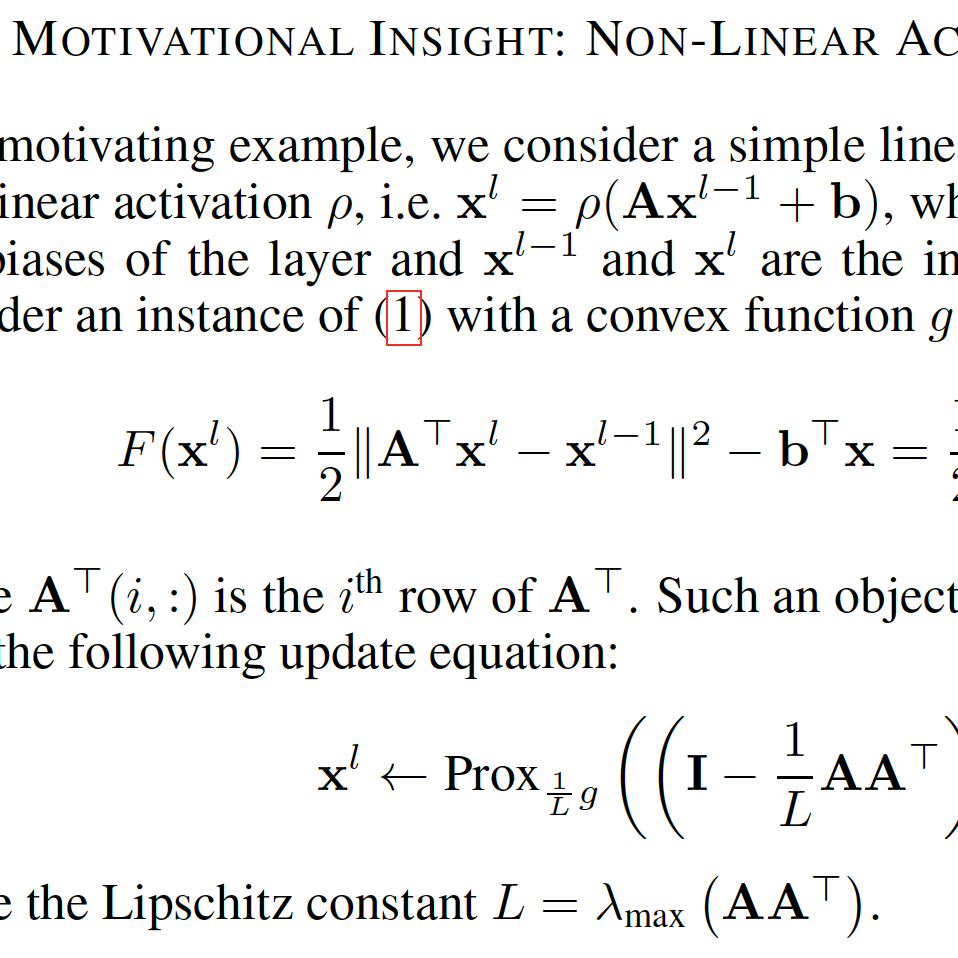

Adel Bibi, Bernard Ghanem, Vladlen Koltun, and René Ranftl

International Conference on Learning Representations (ICLR), 2019

Ozan Sener and Vladlen Koltun

Advances in Neural Information Processing Systems (NIPS), 2018

Zhuwen Li, Qifeng Chen, and Vladlen Koltun

Advances in Neural Information Processing Systems (NIPS), 2018

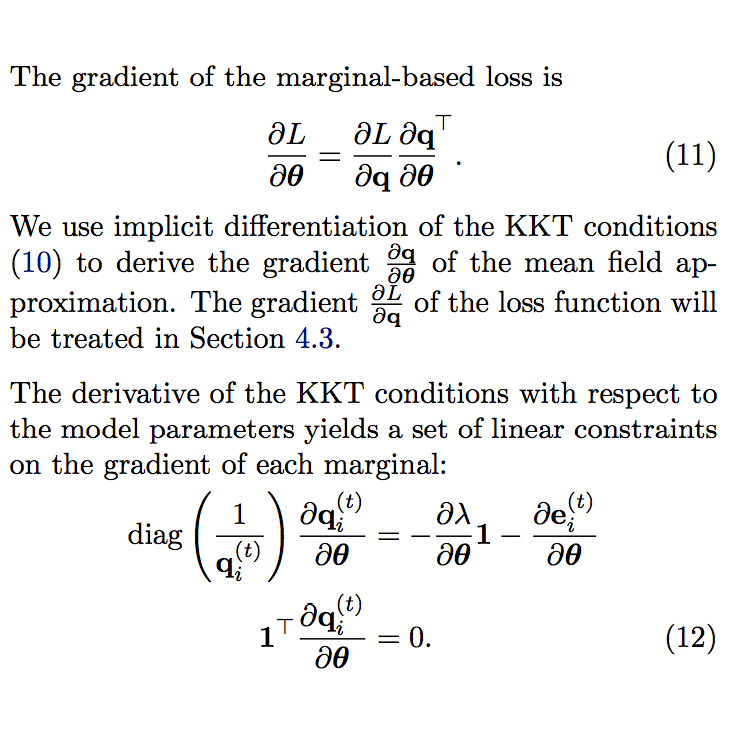

René Ranftl and Vladlen Koltun

European Conference on Computer Vision (ECCV), 2018

Sohil Atul Shah and Vladlen Koltun

Technical Report, arXiv:1803.01449, 2018

Shaojie Bai, J. Zico Kolter, and Vladlen Koltun

Technical Report, arXiv:1803.01271, 2018

Sohil Atul Shah and Vladlen Koltun

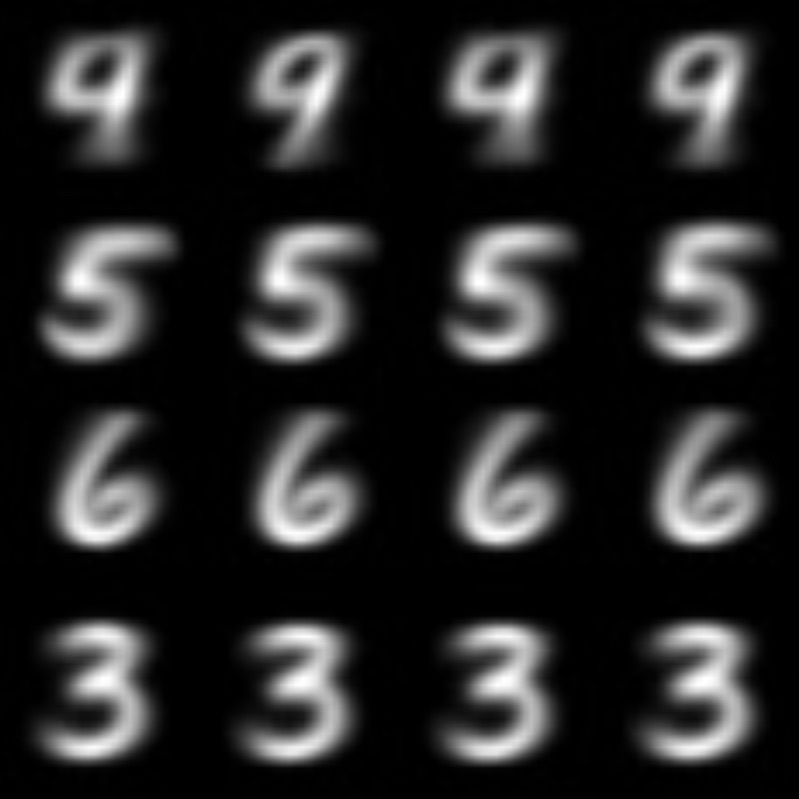

Proceedings of the National Academy of Sciences (PNAS), 114(37), 2017

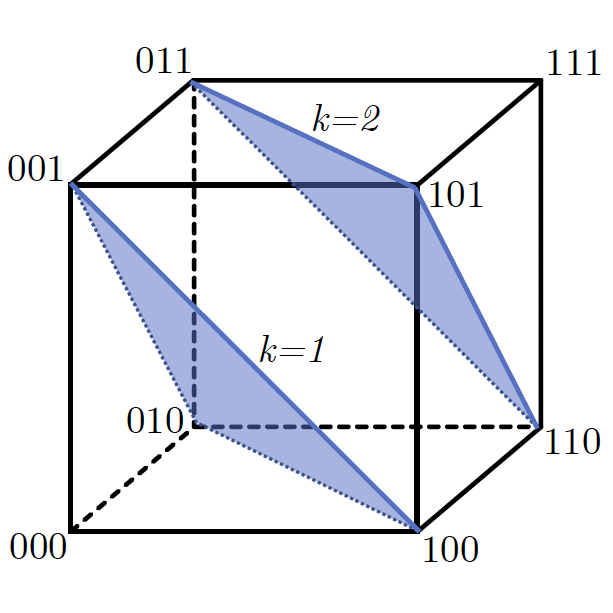

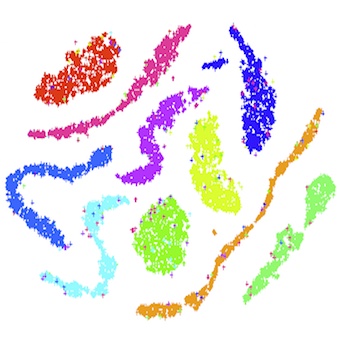

Philipp Krähenbühl and Vladlen Koltun

International Conference on Machine Learning (ICML), 2013

Philipp Krähenbühl and Vladlen Koltun

Advances in Neural Information Processing Systems (NIPS), 2011

(Outstanding Student Paper Award)