Publications

Brennan Shacklett, Luc Guy Rosenzweig, Zhiqiang Xie, Bidipta Sarkar, Andrew Szot, Erik Wijmans, Vladlen Koltun, Dhruv Batra, Kayvon Fatahalian

ACM Transactions on Graphics, 42(4), 2023

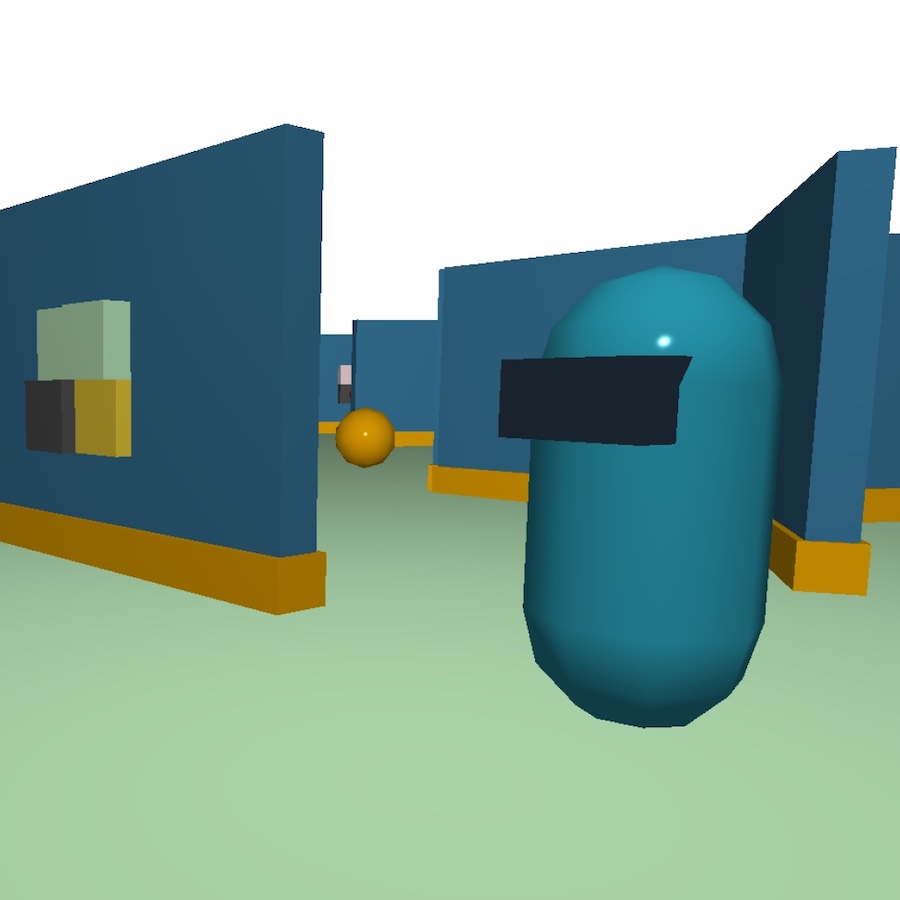

Andrew Szot, Alexander Clegg, Eric Undersander, Erik Wijmans, Yili Zhao, John M. Turner, Noah D. Maestre, Mustafa Mukadam, Devendra Singh Chaplot, Oleksandr Maksymets, Aaron Gokaslan, Vladimír Vondruš, Franziska Meier, Wojciech Galuba, Angel X. Chang, Zsolt Kira, Vladlen Koltun, Jitendra Malik, Manolis Savva, Dhruv Batra

Advances in Neural Information Processing Systems (NeurIPS), 2021 (Selected for spotlight presentation)

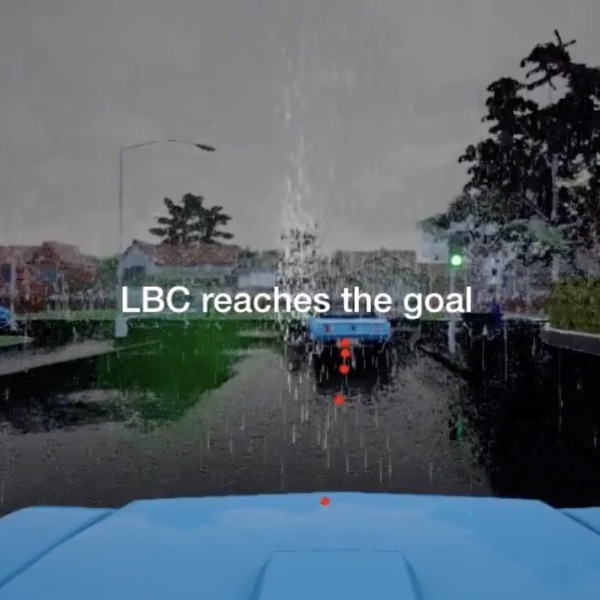

Dian Chen, Vladlen Koltun, and Philipp Krähenbühl

International Conference on Computer Vision (ICCV), 2021 (Selected for full oral presentation)

Aleksei Petrenko, Erik Wijmans, Brennan Shacklett, and Vladlen Koltun

International Conference on Machine Learning (ICML), 2021

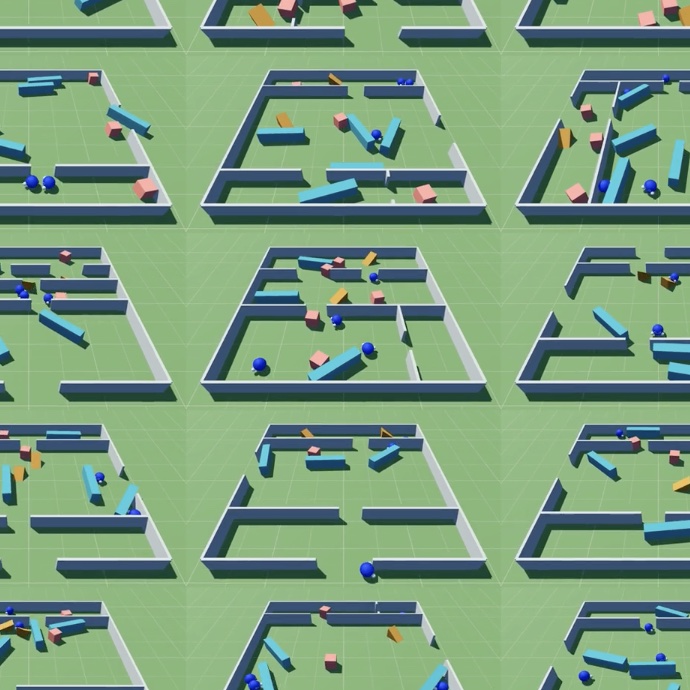

Brennan Shacklett, Erik Wijmans, Aleksei Petrenko, Manolis Savva, Dhruv Batra, Vladlen Koltun, Kayvon Fatahalian

International Conference on Learning Representations (ICLR), 2021

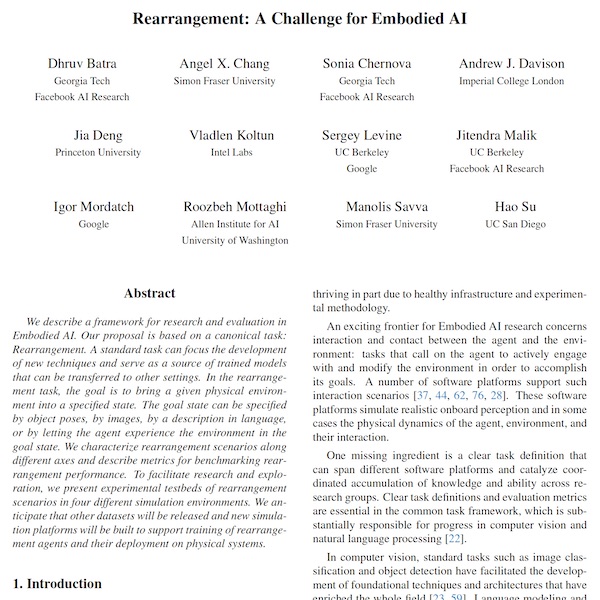

Dhruv Batra, Angel X. Chang, Sonia Chernova, Andrew J. Davison, Jia Deng, Vladlen Koltun, Sergey Levine, Jitendra Malik, Igor Mordatch, Roozbeh Mottaghi, Manolis Savva, Hao Su

Technical Report, arXiv:2011.01975, 2020

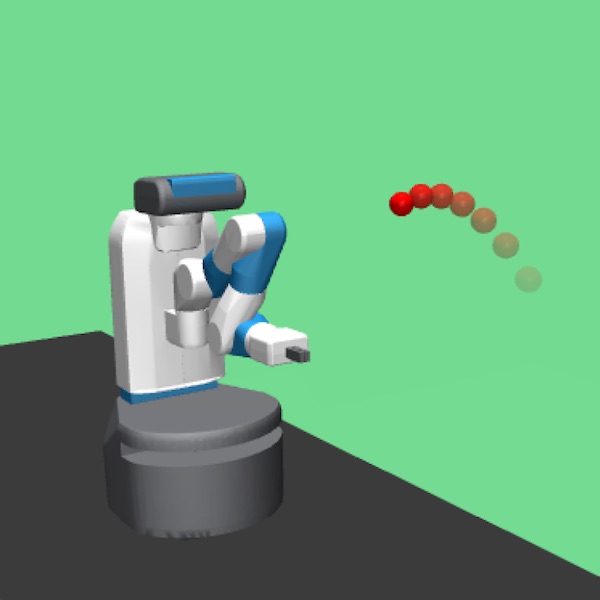

Aleksei Petrenko, Zhehui Huang, Tushar Kumar, Gaurav Sukhatme, and Vladlen Koltun

International Conference on Machine Learning (ICML), 2020

Dian Chen, Brady Zhou, Vladlen Koltun, and Philipp Krähenbühl

Conference on Robot Learning (CoRL), 2019

Manolis Savva, Abhishek Kadian, Oleksandr Maksymets, Yili Zhao, Erik Wijmans, Bhavana Jain, Julian Straub, Jia Liu, Vladlen Koltun, Jitendra Malik, Devi Parikh, Dhruv Batra

International Conference on Computer Vision (ICCV), 2019 (Nominated for the Best Paper Award)

Dmytro Mishkin, Alexey Dosovitskiy, and Vladlen Koltun

Technical Report, arXiv:1901.10915, 2019

Brady Zhou, Philipp Krähenbühl, and Vladlen Koltun

Science Robotics, 4(30), 2019

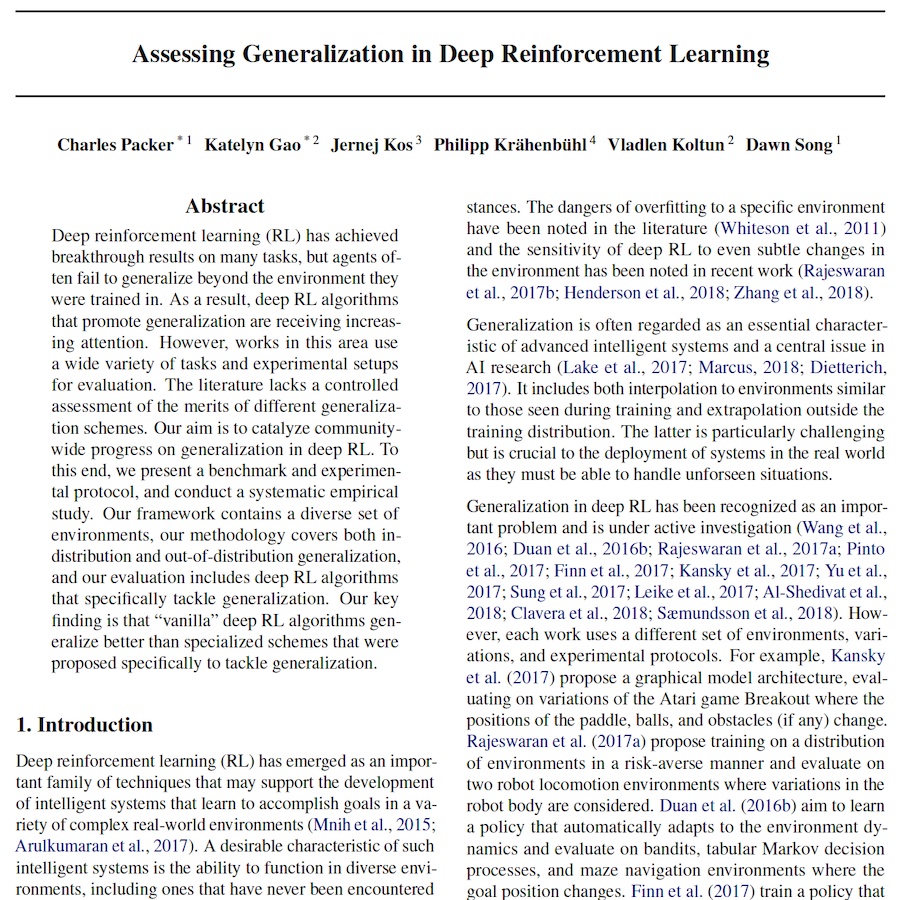

Charles Packer, Katelyn Gao, Jernej Kos, Philipp Krähenbühl, Vladlen Koltun, Dawn Song

Technical Report, arXiv:1810.12282, 2019

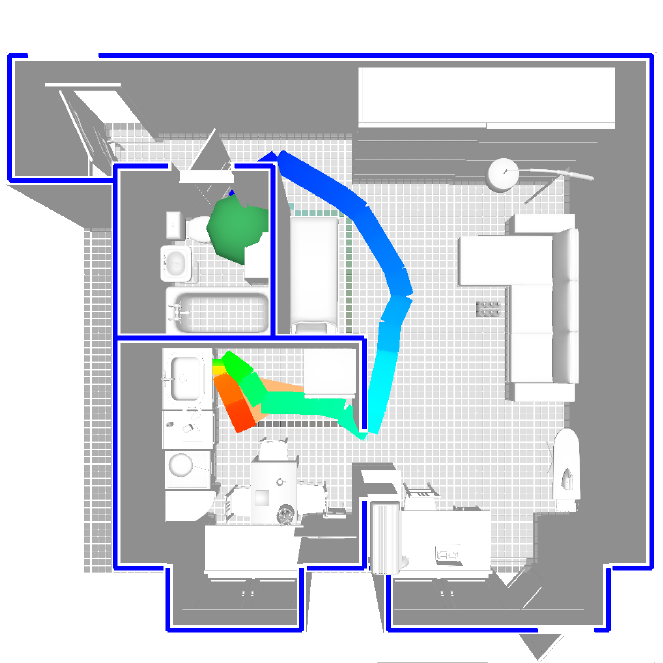

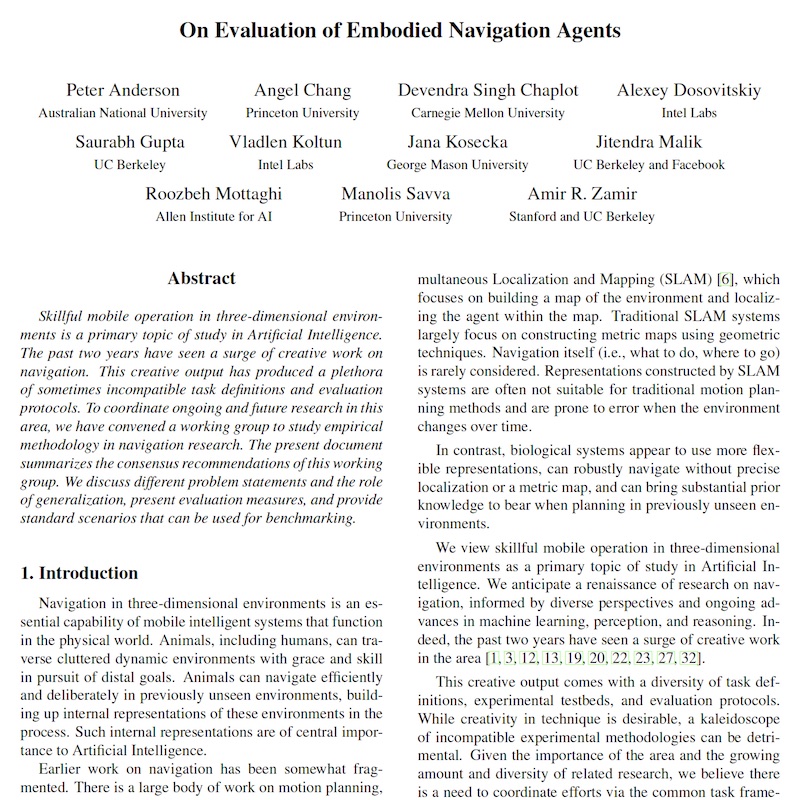

Peter Anderson, Angel Chang, Devendra Singh Chaplot, Alexey Dosovitskiy, Saurabh Gupta, Vladlen Koltun, Jana Kosecka, Jitendra Malik, Roozbeh Mottaghi, Manolis Savva, Amir R. Zamir

Technical Report, arXiv:1807.06757, 2018

Artemij Amiranashvili, Alexey Dosovitskiy, Vladlen Koltun, and Thomas Brox

Conference on Robot Learning (CoRL), 2018

Matthias Müller, Alexey Dosovitskiy, Bernard Ghanem, and Vladlen Koltun

Conference on Robot Learning (CoRL), 2018

Felipe Codevilla, Antonio López, Vladlen Koltun, and Alexey Dosovitskiy

European Conference on Computer Vision (ECCV), 2018

Nikolay Savinov, Alexey Dosovitskiy, and Vladlen Koltun

International Conference on Learning Representations (ICLR), 2018

Artemij Amiranashvili, Alexey Dosovitskiy, Vladlen Koltun, and Thomas Brox

International Conference on Learning Representations (ICLR), 2018

Felipe Codevilla, Matthias Müller, Antonio López, Vladlen Koltun, and Alexey Dosovitskiy

International Conference on Robotics and Automation (ICRA), 2018

Manolis Savva, Angel X. Chang, Alexey Dosovitskiy, Thomas Funkhouser, and Vladlen Koltun

Technical Report, arXiv:1712.03931, 2017

Alexey Dosovitskiy, German Ros, Felipe Codevilla, Antonio López, and Vladlen Koltun

Conference on Robot Learning (CoRL), 2017

Alexey Dosovitskiy and Vladlen Koltun

International Conference on Learning Representations (ICLR), 2017 (Selected for full oral presentation)