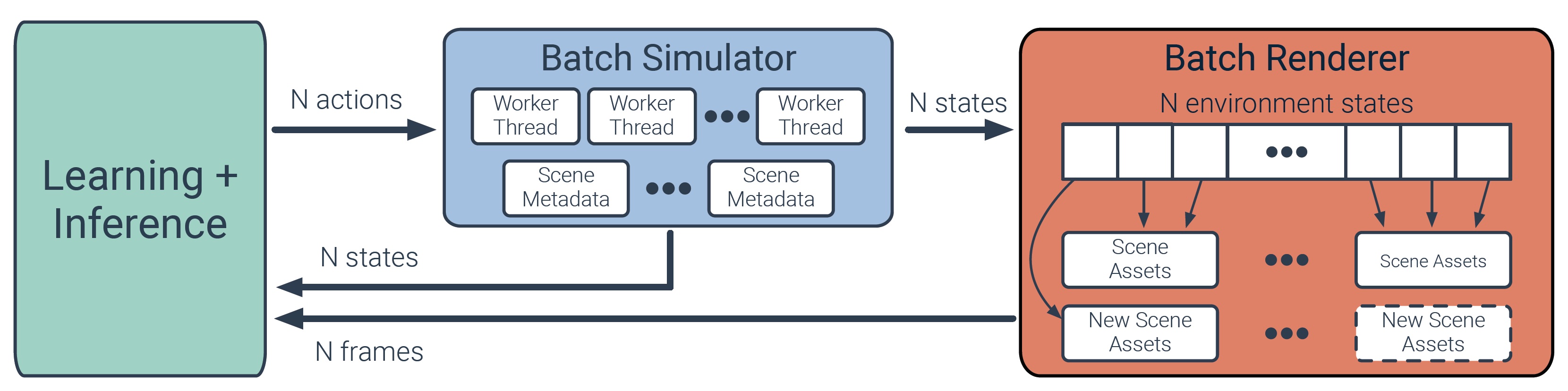

We accelerate deep reinforcement learning-based training in visually complex 3D environments by two orders of magnitude over prior work, realizing end-to-end training speeds of over 19,000 frames of experience per second on a single GPU (and up to 72,000 frames per second on a single eight-GPU machine). The key idea of our approach is to design a 3D renderer and environment simulator around the principle of “batch simulation”: accepting and executing large batches of requests simultaneously. Beyond exposing large amounts of work at once, batch simulation allows simulator implementations to amortize in-memory storage of scene assets, rendering work, data loading, and synchronization costs across many simulation requests, dramatically improving the number of simulated agents per GPU and overall simulation throughput. To balance DNN inference and training costs with faster simulation, we also build a computationally efficient policy DNN that maintains high task performance, and modify training algorithms to maintain sample efficiency when training with large mini-batches. By combining batch simulation and DNN performance optimizations, we demonstrate that PointGoal navigation agents can be trained in complex 3D environments on a single GPU in 1.5 days to 97% of the accuracy of agents trained on a prior state-of-the-art system using a 64-GPU cluster over three days. We provide open-source reference implementations of our batch 3D renderer and simulator to facilitate incorporation of these ideas into current and future RL systems.
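To make the batch simulation idea concrete, the following is a minimal sketch in Python, not the reference implementation. It assumes a toy 2D grid world in place of a 3D scene, and every name in it (`SceneCache`, `AgentState`, `BatchSimulator`, `MOVES`) is hypothetical. It illustrates two properties described above: one `step` call services requests for every agent in the batch, and scene assets are loaded once and shared by all agents placed in that scene.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


class SceneCache:
    """Hypothetical asset store: each scene is loaded once and then shared."""

    def __init__(self) -> None:
        self._scenes: Dict[str, Tuple[int, int]] = {}
        self.loads = 0  # counts how many times a scene was actually loaded

    def get(self, scene_id: str) -> Tuple[int, int]:
        if scene_id not in self._scenes:
            self.loads += 1
            # Toy stand-in for loading geometry: grid bounds (width, height).
            self._scenes[scene_id] = (10, 10)
        return self._scenes[scene_id]


@dataclass
class AgentState:
    scene_id: str
    x: int = 0
    y: int = 0


# Assumed toy action set; the real task's action space may differ.
MOVES: Dict[str, Tuple[int, int]] = {
    "stay": (0, 0),
    "north": (0, 1),
    "south": (0, -1),
    "east": (1, 0),
    "west": (-1, 0),
}


class BatchSimulator:
    """Accepts one action per agent and advances all agents in a single call."""

    def __init__(self, scene_ids: List[str]) -> None:
        self.cache = SceneCache()
        self.agents = [AgentState(scene_id) for scene_id in scene_ids]
        self.step_calls = 0

    def step(self, actions: List[str]) -> List[Tuple[int, int]]:
        if len(actions) != len(self.agents):
            raise ValueError("expected exactly one action per agent")
        self.step_calls += 1  # one request covers the whole batch
        observations = []
        for agent, action in zip(self.agents, actions):
            width, height = self.cache.get(agent.scene_id)  # shared asset lookup
            dx, dy = MOVES[action]
            # Clamp the move so the agent stays inside the scene bounds.
            agent.x = min(max(agent.x + dx, 0), width - 1)
            agent.y = min(max(agent.y + dy, 0), height - 1)
            observations.append((agent.x, agent.y))
        return observations


# Four agents, three of which share the same scene.
sim = BatchSimulator(["scene_a", "scene_a", "scene_a", "scene_b"])
obs = sim.step(["east", "north", "west", "east"])
print(obs, sim.cache.loads, sim.step_calls)
```

In this sketch, four agents are advanced with a single `step` call and only two scene loads, since three agents share `scene_a`. A real batch simulator would execute the per-agent work in parallel on the GPU and return rendered observations rather than grid coordinates; the sequential loop here only illustrates the interface shape.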

Large Batch Simulation for Deep Reinforcement Learning