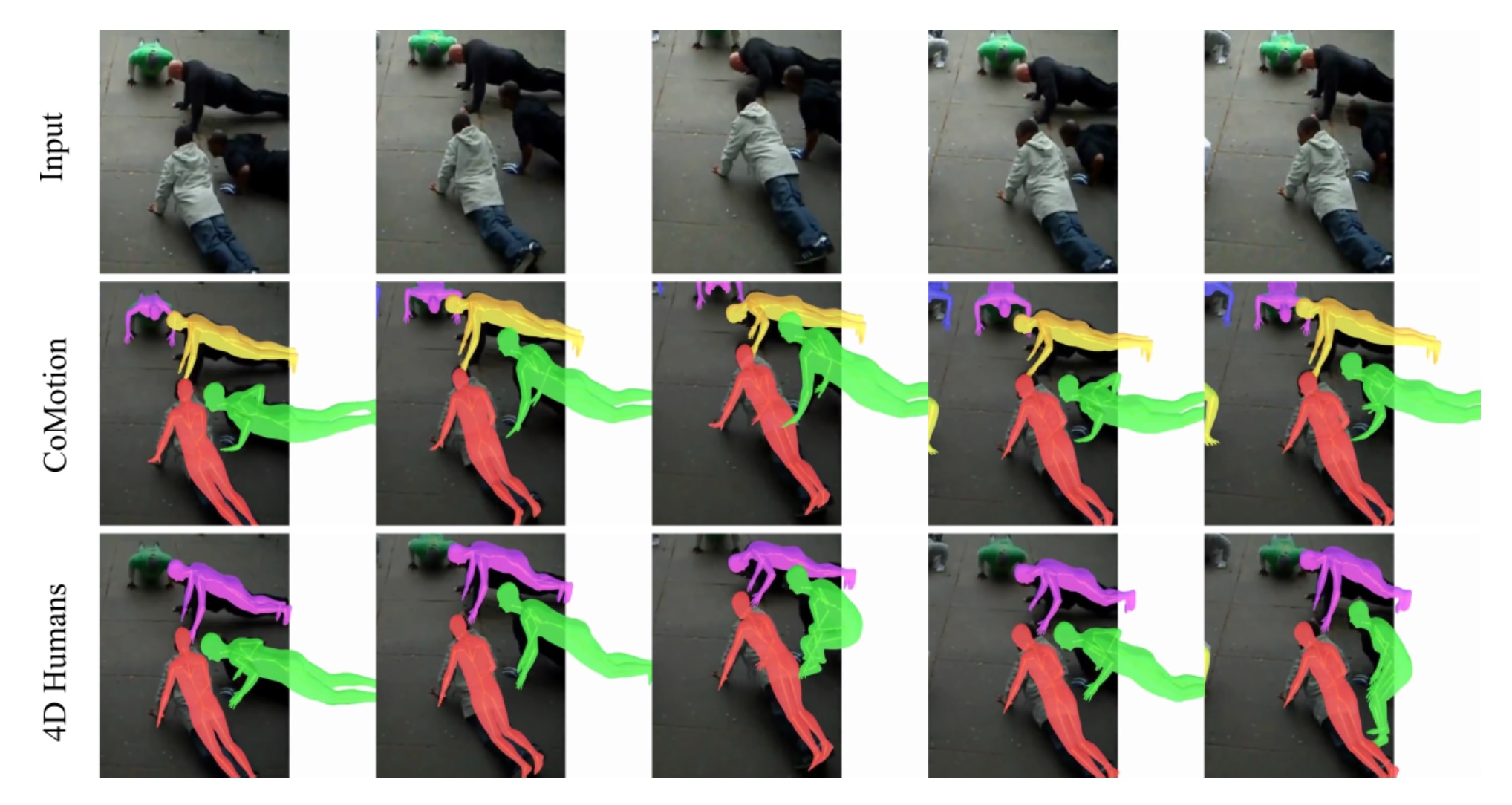

We introduce an approach for tracking detailed 3D poses of multiple people from a single monocular camera stream. Our system maintains temporally coherent predictions in crowded scenes with difficult poses and occlusions. Rather than detecting poses and associating them with existing tracks, our model directly updates all tracked poses simultaneously given a new input image. We train on numerous single-image and video datasets with both 2D and 3D annotations to produce a model that matches the 3D pose estimation quality of state-of-the-art systems while tracking faster and more accurately on in-the-wild videos.
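To make the contrast between the two tracking patterns concrete, the following is a minimal sketch of their control flow only. It is not the model described above: `Track`, `pose_distance`, `detect_then_associate`, `direct_update`, and `toy_update_fn` are hypothetical names introduced for illustration, a pose is reduced to a short list of floats, and the learned update is replaced by a trivial stand-in function.

```python
from dataclasses import dataclass
from typing import Callable, List

# Placeholder for a 3D pose; the actual pose parameterization is an assumption here.
Pose = List[float]


@dataclass
class Track:
    track_id: int
    pose: Pose


def pose_distance(a: Pose, b: Pose) -> float:
    """Squared Euclidean distance between two toy poses."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def detect_then_associate(tracks: List[Track], detections: List[Pose]) -> List[Track]:
    """Baseline pattern: poses are detected independently, then greedily matched to tracks."""
    unused = list(range(len(detections)))
    updated = []
    for t in tracks:
        if not unused:
            updated.append(t)  # no detection left, so keep the previous pose
            continue
        best = min(unused, key=lambda i: pose_distance(t.pose, detections[i]))
        unused.remove(best)
        updated.append(Track(t.track_id, detections[best]))
    return updated


def direct_update(
    tracks: List[Track],
    frame: List[float],
    update_fn: Callable[[List[Pose], List[float]], List[Pose]],
) -> List[Track]:
    """Direct-update pattern: one call refreshes every tracked pose from the new frame.

    Identity is carried by position in the track list, so no matching step is needed.
    """
    new_poses = update_fn([t.pose for t in tracks], frame)
    return [Track(t.track_id, p) for t, p in zip(tracks, new_poses)]


def toy_update_fn(poses: List[Pose], frame: List[float]) -> List[Pose]:
    """Stand-in for a learned update: shifts every pose by a per-frame offset."""
    return [[p + f for p, f in zip(pose, frame)] for pose in poses]


tracks = [Track(0, [0.0, 0.0, 0.0]), Track(1, [1.0, 1.0, 1.0])]
associated = detect_then_associate(tracks, [[1.1, 1.0, 1.0], [0.1, 0.0, 0.0]])
updated = direct_update(tracks, [0.5, 0.0, 0.0], toy_update_fn)
```

In the first pattern, track identity depends on the outcome of a matching step that can fail under occlusion; in the second, each track's identity is preserved by construction because its own previous state is what gets updated.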

CoMotion: Concurrent Multi-person 3D Motion